四足機器人導航可以采用多種方案,對於小型室內機器人更多的會采用2D雷達執行SLAM演算法實作地圖的構建,典型的地圖演算法包括HectorMap、Gmapping,由Google推出的 Cartographer SLAM是目前公認效果較好的2D 建圖和定位演算法。其可以融合雷達、IMU和機器人裏程計,采用圖最佳化因此相比前兩個SLAM演算法具有更好的建圖效果,目前室內掃地機器人或輪式底盤都會采用該方案。

對於輪式機器人由於僅關心自己相對地面很低高度範圍內的避障問題,因此需要將2D雷達布置於較低位置來對障礙物進行探測。

但是對於四足機器人來說,由於四足機器人本身的站立高度,使得2D雷達建圖存在如下的幾個特點:

1)成本低:采用2D雷達結合足式裏程計可以實作室內環境下較好的定位和建圖效果,並進一步用於機器人導航,2D雷達低成本相比視覺也更可靠;

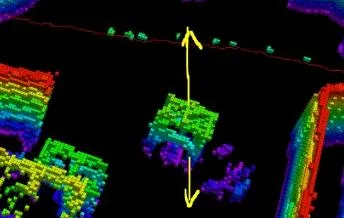

2)僅能建立機體高度平面的占據地圖:由於雷達往往安裝在四足機器人背面,因此僅能建立該平面高度的占據地圖,僅采用2D雷達避障無法實作躲避低矮的障礙物;

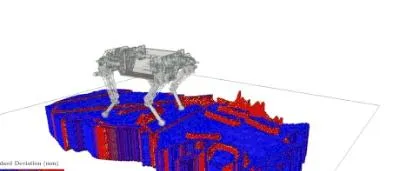

綜上,對於四足機器人來說除了保證機體層導航安全外,對於低矮障礙物如門檻、盒子、鞋子等室內物品,僅依靠2D雷達會導致機器人踩踏和摔倒,因此四足機器人對於局部地圖的資訊更加關註,對於非結構地形需要主動落足跨越或者躲避障礙物,比較有代表的為ETH開源的elevation map計畫。

1 2D雷達融合局部地圖

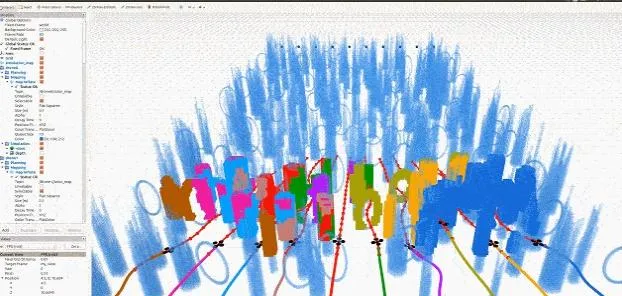

Ego Planner浙大FAST LAB開源的路徑規劃演算法,其包含了基於GridMap和占據地圖的局部地圖,借助簡單的視覺和裏程計數據就可以實作線上動態軌跡規劃, 並控制無人機完成樹林穿越、狹窄圓環等特技穿越飛行 ,最新推出的swarm集群版本更可以支持多機器人的集群飛行。

標準的Ego Planner采用Vins作為視覺裏程計並采用Realsense深度點雲來構建局部地圖。本計畫采用2D雷達來代替Vins采用Ego Planner完成對局部地圖的構建。

2D雷達完成 :全域占據地圖構建、全域路徑導航;

Ego Planner完成: 對局部地圖的構建並完成對低矮障礙物局部路徑規劃;

存在的問題 :由於Ego Planner針對無人機其可以在空間高度上實作3維軌跡的規劃,因此無法滿足機器人繞行的需求,很多時候會采用跨越飛行來實作!

2 Ego Planner基本介紹

本計畫,采用Cartographer使用2 D雷達和機器人IMU、裏程計 完成全域地圖構建和自身導航定位,並為Ego Planner提供Odom與TF定位資訊,透過D435提供的深度影像實作局部地圖構建。由於Ego Planner預設采用Map作為座標系,因此如果采用雷達導航需要釋出下世界和地圖固定變換:

<node pkg="tf" type="static_transform_publisher" name="map_to_world"

args="0 0 0 0 0 0 /map /world 100">

</node>

(1)話題對映

規劃器訂閱話題參數在advanced_param.xml中,需要增蓋瑞程計、相機點雲、相機位姿和深度相機影像:

<remap from="~grid_map/odom" to="/odom_map"/>

<remap from="~grid_map/cloud" to="/camera/depth/color/points"/>

<remap from="~grid_map/pose" to = "/odom_pose"/>

<remap from="~grid_map/depth" to = "/camera/depth/image_rect_raw"/>

Ego_planner在源碼中可以看到采用了兩套話題方法透過pose_type選擇,0采用前面兩個話題即相機的裏程計和點雲數據,但是直接訂閱點雲會使得數據量很大;1采用後者,則需要提供深度相機的位姿數據,直接采用深度相機的影像:

<param name="grid_map/pose_type" value="1"/>

<param name="grid_map/frame_id" value="map"/>

具體實作在grid_map.cpp中:

/mp_.pose_type_ = POSE_STAMPED ;

if (mp_.pose_type_ == POSE_STAMPED)

{

pose_sub_.reset(

new message_filters::Subscriber<geometry_msgs::PoseStamped>(node_, "grid_map/pose", 50));

sync_image_pose_.reset(new message_filters::Synchronizer<SyncPolicyImagePose>(

SyncPolicyImagePose(100), *depth_sub_, *pose_sub_));

sync_image_pose_->registerCallback(boost::bind(&GridMap::depthPoseCallback, this, _1, _2));

std::cout<<"Pose mode"<<endl;

}

else if (mp_.pose_type_ == ODOMETRY)//

{

odom_sub_.reset(new message_filters::Subscriber<nav_msgs::Odometry>(node_, "grid_map/odom", 100, ros::TransportHints().tcpNoDelay()));

sync_image_odom_.reset(new message_filters::Synchronizer<SyncPolicyImageOdom>(

SyncPolicyImageOdom(100), *depth_sub_, *odom_sub_));

sync_image_odom_->registerCallback(boost::bind(&GridMap::depthOdomCallback, this, _1, _2));

std::cout<<"Odom mode"<<std::endl;

}

(2)采用2D雷達TF釋出Odom數據

為了實作高即時的建圖一般采用ODOMETRY的定位方式,但是其需要提供相機在世界座標系下的位姿,對於雷達定位來說一般只能產生TF變換並不能得到odom的話題,因此需要自己計算並釋出。方法是計算相機TF相對MAP的TF變換並按Odom訊息型別進行釋出:

pose_pub = n.advertise<geometry_msgs::PoseStamped>("/odom_pose", 10, true);

odom_pub = n.advertise<nav_msgs::Odometry>("/odom_map", 10);

//tf::TransformListener listener;

tf::TransformListener listener(ros::Duration(5));//緩沖5s防止報錯

ros::Timer timer=n.createTimer(ros::Duration(0.01),boost::bind(&transformPoint, boost::ref(listener)));//0.01s 100Hz呼叫一次監聽器

采用listener監聽TF變換:

void transformPoint(const tf::TransformListener &listener) {//監聽TF 獲取機器人質心的位置

try{

tf::StampedTransform transform;

//得到座標odom和座標base_link之間的關系

listener.waitForTransform("map", "camera_link_odom", ros::Time(0), ros::Duration(0.005));

listener.lookupTransform("map", "camera_link_odom",

ros::Time(0), transform);

printTf_camera(transform);

}

catch (tf::TransformException &ex) {

//ROS_ERROR("%s",ex.what());

}

}

釋出Odom訊息:

void printTf_camera(tf::Transform tf) {

//cout<<"vector from reference frame to to child frame: "<<tfVec.getX()<<","<<tfVec.getY()<<","<<tfVec.getZ()<<endl;

geometry_msgs::PoseStamped position_3d;

position_3d.pose.position.x = tf.getOrigin().getX();

position_3d.pose.position.y = tf.getOrigin().getY();

position_3d.pose.position.z = tf.getOrigin().getZ();//odom_3d->pose.pose.position.z;

if( position_3d.pose.position.z<0.1)

position_3d.pose.position.z=0.1;

position_3d.pose.orientation.x = tf.getRotation().x();

position_3d.pose.orientation.y = tf.getRotation().y();

position_3d.pose.orientation.z = tf.getRotation().z();

position_3d.pose.orientation.w = tf.getRotation().w();

position_3d.header.stamp = ros::Time::now();

position_3d.header.frame_id = "map";

pose_pub.publish(position_3d);

nav_msgs::Odometry odom;

odom.header.stamp = ros::Time::now();

odom.header.frame_id = "map";

//set the position

odom.pose.pose.position.x = position_3d.pose.position.x;

odom.pose.pose.position.y = position_3d.pose.position.y;

odom.pose.pose.position.z = position_3d.pose.position.z;

odom.pose.pose.orientation = position_3d.pose.orientation;

//set the velocity

odom.child_frame_id = "base_link";

odom.twist.twist.linear.x = 0;

odom.twist.twist.linear.y = 0;

odom.twist.twist.angular.z = 0;

//odom_pub.publish(odom);

}

下面兩個參數決定了局部地圖在機器人後端消失的部份,margin越小則越快消失,ground_height則決定了地面建模高度,對於不想考慮的低矮障礙物可以采用該值進行遮蔽:

<param name="grid_map/local_map_margin" value="15"/>

<param name="grid_map/ground_height" value="0.05"/>

3 Ego Planner面向地面機器人的簡單修改

如前文所述,Ego Planner原始是用於無人機3D軌跡規劃的,因此對於低矮的障礙物無人機是可以從上方飛越的,而對於地面機器人來說其只能繞過障礙物,因此需要對原始的建圖機制進行修改,Ego Planner采用Grid Map管理局部地圖並進行膨脹和軌跡規劃。

我采用了一種比較簡單的方法,原始建圖演算法實際可以建立出空間中的障礙物, 因此我們只需要將該點沿著豎直方向進行擴充套件 ,與地面連線、與虛擬天花板連線。這樣最終軌跡規劃就能約束不會從障礙物上方越過, 而是實作繞行 ,進一步透過約束地面高度,則可以濾除一些較為矮小即四足機器人透過步態可以透過的障礙物:

(1)占據柵格修改

則簡單的修改方式在Grid_map.cpp裏完成對障礙物膨脹的程式碼,基本的原理就是在每個障礙物XY座標位置進行高度迴圈將占據標誌位設定為1,md_.occupancy_buffer_inflate_[idx_inf] :

// inflate obstacles

for (int x = md_.local_bound_min_(0); x <= md_.local_bound_max_(0); ++x)

for (int y = md_.local_bound_min_(1); y <= md_.local_bound_max_(1); ++y)

for (int z = md_.local_bound_min_(2); z <= md_.local_bound_max_(2); ++z)

{

if (md_.occupancy_buffer_[toAddress(x, y, z)] > mp_.min_occupancy_log_)

{

#if 1//doghome for ground robot

for (int z1 = 0; z1 <= 100; ++z1){

inflatePoint(Eigen::Vector3i(x, y, z1*mp_.resolution_*2), inf_step, inf_pts);

for (int k = 0; k < (int)inf_pts.size(); ++k)

{

inf_pt = inf_pts[k];

int idx_inf = toAddress(inf_pt);

if (idx_inf < 0 ||

idx_inf >= mp_.map_voxel_num_(0) * mp_.map_voxel_num_(1) * mp_.map_voxel_num_(2))

{

continue;

}

md_.occupancy_buffer_inflate_[idx_inf] = 1;

}

}

#else

inflatePoint(Eigen::Vector3i(x, y, z), inf_step, inf_pts);

for (int k = 0; k < (int)inf_pts.size(); ++k)

{

inf_pt = inf_pts[k];

int idx_inf = toAddress(inf_pt);

if (idx_inf < 0 ||

idx_inf >= mp_.map_voxel_num_(0) * mp_.map_voxel_num_(1) * mp_.map_voxel_num_(2))

{

continue;

}

md_.occupancy_buffer_inflate_[idx_inf] = 1;

}

#endif

}

}

另外在釋出占據地圖時可以進行如下修改,避免對地面區域和虛擬天花板的顯示,在Rviz裏僅顯示障礙物的膨脹柵格:

void GridMap::publishMapInflate(bool all_info)

{

if (map_inf_pub_.getNumSubscribers() <= 0)

return;

pcl::PointXYZ pt;

pcl::PointCloud<pcl::PointXYZ> cloud;

Eigen::Vector3i min_cut = md_.local_bound_min_;

Eigen::Vector3i max_cut = md_.local_bound_max_;

if (all_info)

{

int lmm = mp_.local_map_margin_;

min_cut -= Eigen::Vector3i(lmm, lmm, lmm);

max_cut += Eigen::Vector3i(lmm, lmm, lmm);

}

boundIndex(min_cut);

boundIndex(max_cut);

for (int x = min_cut(0); x <= max_cut(0); ++x)

for (int y = min_cut(1); y <= max_cut(1); ++y)

for (int z = min_cut(2); z <= max_cut(2); ++z)

{

if (md_.occupancy_buffer_inflate_[toAddress(x, y, z)] == 0)

continue;

Eigen::Vector3d pos;

indexToPos(Eigen::Vector3i(x, y, z), pos);

if (pos(2) > mp_.visualization_truncate_height_

||pos(2)<mp_.map_draw_range_min||pos(2)>mp_.map_draw_range_max

)//doghome

continue;

#if 0//for ground robot

for (int z1 = 0; z1 <= 15; ++z1){

pt.x = pos(0);

pt.y = pos(1);

pt.z = z1* mp_.resolution_;

cloud.push_back(pt);

}

#else

pt.x = pos(0);

pt.y = pos(1);

pt.z = pos(2);

#endif

cloud.push_back(pt);

}

cloud.width = cloud.points.size();

cloud.height = 1;

cloud.is_dense = true;

cloud.header.frame_id = mp_.frame_id_;

sensor_msgs::PointCloud2 cloud_msg;

pcl::toROSMsg(cloud, cloud_msg);

map_inf_pub_.publish(cloud_msg);

// ROS_INFO("pub map");

}

(2)四足機器人軌跡跟蹤控制

上面完成了對地圖部份的修改,即讓機器人可以對任意障礙物進行處理從而解決地面機器人繞行的需求,對於其他的運動軌跡如機器人鉆過一個門洞也只需要限制膨脹柵格的方式就行,但是要實作機器人的軌跡控制仍然是不行的。Ego Planner原始規劃模型是面向四軸飛行器,在軌跡控制是直接產生位置、速度和加速度前饋指令:

pos = traj_[0].evaluateDeBoorT(t_cur);

vel = traj_[1].evaluateDeBoorT(t_cur);

acc = traj_[2].evaluateDeBoorT(t_cur);

因此隨著時間的遞增,期望指令會不斷向前移動,而四足機器人由於模型不一致,雖然也是一個全向平台但是難以實作對軌跡的跟蹤,因此這裏采用了預瞄點+PID的簡單處理,即透過獲取當前機器人位置前方的軌跡目標進行PID控制產生機器人的機體速度和轉向速度,從而實作對軌跡的跟蹤。由於需要反饋控制首先需要采用釋出相機位姿的相同方法釋出機體TF的變換Odom並在traj_server中進行訂閱:

void odomCallback(const nav_msgs::Odometry::ConstPtr& odom_3d)

{

nav_msgs::Odometry position_3d;

position_3d.pose.pose.position.x = odom_3d->pose.pose.position.x;

position_3d.pose.pose.position.y = odom_3d->pose.pose.position.y;

position_3d.pose.pose.position.z = odom_3d->pose.pose.position.z;

position_3d.pose.pose.orientation = odom_3d->pose.pose.orientation;

position_3d.header.stamp = odom_3d->header.stamp;

position_3d.header.frame_id = "map";

odom_base=position_3d;

tf::Quaternion quat;

tf::quaternionMsgToTF(odom_3d->pose.pose.orientation, quat);

//定義儲存r\p\y的容器

tf::Matrix3x3(quat).getRPY(roll, pitch, yaw);//進行轉換

//std::cout << roll*57.3<< ' ' <<pitch*57.3 << ' ' << yaw *57.3<< std::endl;

//std::cout << odom_3d -> header.stamp << ' ' << odom_3d->pose.pose.position.x << ' ' << odom_3d->pose.pose.position.y << ' ' << odom_3d->pose.pose.position.z << std::endl;

}

則對軌跡控制函式進行修改,釋出cmd_vel指令:

void cmdCallback(const ros::TimerEvent &e)

{

/* no publishing before receive traj_ */

geometry_msgs::Twist cmd_vel;

if (!receive_traj_){

cmd_vel.linear.x=0;

cmd_vel.linear.y=0;

cmd_vel.linear.z=0;

cmd_vel.angular.z=0;

cmd_vel_pub.publish(cmd_vel);

return;

}

ros::Time time_now = ros::Time::now();

double t_cur = (time_now - start_time_).toSec();

Eigen::Vector3d pos(Eigen::Vector3d::Zero()), vel(Eigen::Vector3d::Zero()), acc(Eigen::Vector3d::Zero()), pos_f;

std::pair<double, double> yaw_yawdot(0, 0);

static ros::Time time_last = ros::Time::now();

if (t_cur < traj_duration_ && t_cur >= 0.0)

{

pos = traj_[0].evaluateDeBoorT(t_cur);

vel = traj_[1].evaluateDeBoorT(t_cur);

acc = traj_[2].evaluateDeBoorT(t_cur);

/*** calculate yaw ***/

yaw_yawdot = calculate_yaw(t_cur, pos, time_now, time_last);

/*** calculate yaw ***/

double tf = min(traj_duration_, t_cur + 2.0);

pos_f = traj_[0].evaluateDeBoorT(tf);

}

else if (t_cur >= traj_duration_)

{

/* hover when finish traj_ */

pos = traj_[0].evaluateDeBoorT(traj_duration_);

vel.setZero();

acc.setZero();

yaw_yawdot.first = last_yaw_;

yaw_yawdot.second = 0;

pos_f = pos;

}

else

{

cout << "[Traj server]: invalid time." << endl;

}

time_last = time_now;

cmd.header.stamp = time_now;

cmd.header.frame_id = "world";

cmd.trajectory_flag = quadrotor_msgs::PositionCommand::TRAJECTORY_STATUS_READY;

cmd.trajectory_id = traj_id_;

cmd.position.x = pos(0);

cmd.position.y = pos(1);

cmd.position.z = pos(2);

cmd.velocity.x = vel(0);

cmd.velocity.y = vel(1);

cmd.velocity.z = vel(2);

cmd.acceleration.x = acc(0);

cmd.acceleration.y = acc(1);

cmd.acceleration.z = acc(2);

cmd.yaw = yaw_yawdot.first;

cmd.yaw_dot = yaw_yawdot.second;

last_yaw_ = cmd.yaw;

pos_cmd_pub.publish(cmd);

#if 0

//spd controll

float temp[3]={cmd.velocity.x,cmd.velocity.y,0};

float xd=cos(-yaw)*temp[0]-sin(-yaw)*temp[1];

float yd=sin(-yaw)*temp[0]+cos(-yaw)*temp[1];

cmd_vel.linear.x=xd;

cmd_vel.linear.y=yd;

cmd_vel.linear.z=0;//cmd.velocity.x ;

cmd_vel.angular.z=yaw_yawdot.second;

#else

//pose control

float traj_dt=0.1;

float dis=0;

float dis_min=99;

int id_closet=0;

float pos_now[3]={odom_base.pose.pose.position.x,odom_base.pose.pose.position.y,odom_base.pose.pose.position.z};

float pos_tart[3];

for(int i=0;i<100;i++){

double t_cur = traj_dt*i;

if (t_cur < traj_duration_ && t_cur >= 0.0)

{

pos = traj_[0].evaluateDeBoorT(t_cur);

dis=sqrt((pos[0]-pos_now[0])*(pos[0]-pos_now[0])+(pos[1]-pos_now[1])*(pos[1]-pos_now[1]));

if(dis<dis_min)

{

dis_min=dis;

id_closet=i;

}

}else

break;

}

float t_forward=time_forward_;

t_cur = traj_dt*id_closet+t_forward;

if(t_cur>traj_duration_)

t_cur=traj_duration_;

pos = traj_[0].evaluateDeBoorT(t_cur);

pos_tart[0]=pos[0];

pos_tart[1]=pos[1];

//spd controll

float temp[3]={0,0,0};

temp[0]=limit_ws(pos_tart[0]-pos_now[0],-0.25,0.25)*kp_pos;

temp[1]=limit_ws(pos_tart[1]-pos_now[1],-0.25,0.25)*kp_pos;

temp[0]=limit_ws(temp[0],-max_spd_x,max_spd_x);

temp[1]=limit_ws(temp[1],-max_spd_x,max_spd_x);

float xd=cos(-yaw)*temp[0]-sin(-yaw)*temp[1];

float yd=sin(-yaw)*temp[0]+cos(-yaw)*temp[1];

#if 0

t_forward=time_forward_;

t_cur = traj_dt*id_closet+t_forward;

if(t_cur>traj_duration_)

t_cur=traj_duration_;

pos = traj_[0].evaluateDeBoorT(t_cur);

pos_tart[0]=pos[0];

pos_tart[1]=pos[1];

#endif

double yaw_temp = atan2(pos_tart[1]-pos_now[1], pos_tart[0]-pos_now[0]);

float yaw_spd=To_180_degreesw(yaw_temp*57.3-yaw*57.3)/57.3*kp_yaw;

yaw_spd=limit_ws(yaw_spd,-max_spd_yaw,max_spd_yaw);

float yaw_weight=0;

yaw_weight=limit_ws(fabs(yaw_spd/0.4),0,1);

if(yaw_weight<0.2)

yaw_weight=0;

xd=limit_ws(xd,-max_spd_x,max_spd_x);

yd=limit_ws(yd,-max_spd_y,max_spd_y);

cmd_vel.linear.x=xd*(1-yaw_weight)*1;

cmd_vel.linear.y=yd*(1-yaw_weight)*1;

cmd_vel.linear.z=0;

pos = traj_[0].evaluateDeBoorT(traj_duration_);

dis=sqrt((pos[0]-pos_now[0])*(pos[0]-pos_now[0])+(pos[1]-pos_now[1])*(pos[1]-pos_now[1]));

if(fabs(dis)>0.1)

cmd_vel.angular.z=yaw_spd;

else

cmd_vel.angular.z=0;

if(fabs(dis)<0.025)

{

cmd_vel.linear.x=cmd_vel.linear.y=0;//yd*(1-yaw_weight)*1;

cmd_vel.linear.z=0;

}

#endif

//ROS_INFO("%f %f",t_cur,traj_duration_);

//ROS_INFO("Linear Components:%f [%f,%f,%f]",yaw_weight,cmd_vel.linear.x,cmd_vel.linear.y,-cmd_vel.linear.z);

//ROS_INFO("Angular Components:[%f,%f,%f]",cmd_vel.angular.x,cmd_vel.angular.y,cmd_vel.angular.z);

cmd_vel_pub.publish(cmd_vel);

}

(3)軌跡持續重規劃

最後還有一點,就是Ego Planner並不是一值周期重規劃,其除了用一個固定周期重規劃外,主要是依賴軌跡跟蹤的偏差,即機器人偏離軌跡過大後才會重規劃,而地面機器人由於需要即時重規劃,因此需要對其replan_fsm狀態機進行修改:

case EXEC_TRAJ:

{

/* determine if need to replan */

//en_replan=0;

replan_cnt++;

LocalTrajData *info = &planner_manager_->local_data_;

ros::Time time_now = ros::Time::now();

double t_cur = 0.0;// 0;//(time_now - info->start_time_).toSec();//doghome

t_cur = min(info->duration_, t_cur);

double t_cur1 = (time_now - info->start_time_).toSec();//doghome

t_cur1 = min(info->duration_, t_cur1);

Eigen::Vector3d pos = info->position_traj_.evaluateDeBoorT(t_cur);

/* && (end_pt_ - pos).norm() < 0.5 */

if ((target_type_ == TARGET_TYPE::PRESET_TARGET) &&

(wp_id_ < waypoint_num_ - 1) &&

(end_pt_ - pos).norm() < no_replan_thresh_)

{

wp_id_++;

planNextWaypoint(wps_[wp_id_]);

}

else if ((local_target_pt_ - end_pt_).norm() < 0.15) // close to the global target doghome

{

if (t_cur1 > info->duration_ - 1e-2)

{

have_target_ = false;

have_trigger_ = false;

if (target_type_ == TARGET_TYPE::PRESET_TARGET)

{

wp_id_ = 0;

planNextWaypoint(wps_[wp_id_]);

}

std::cout<<"Reaching Waypoints_"<<std::endl;

changeFSMExecState(WAIT_TARGET, "FSM");

goto force_return;

// return;

}

else if ((end_pt_ - pos).norm() > no_replan_thresh_ && t_cur1 > replan_thresh_)

{

changeFSMExecState(REPLAN_TRAJ, "FSM");

}

}

else if (t_cur1 > replan_thresh_)

{

changeFSMExecState(REPLAN_TRAJ, "FSM");

replan_cnt=0;

std::cout<<"replan_cnt replan_thresh_"<<std::endl;

}

break;

}

即在軌跡規劃執行中增加周期計數判斷,讓狀態機跳回重規劃部份,同時重規劃一直采用全域重規劃函式:

case REPLAN_TRAJ://moshi

{

bool success = planFromGlobalTraj(20); // zx-todo

if (success)

{

changeFSMExecState(EXEC_TRAJ, "FSM");

flag_escape_emergency_ = true;

publishSwarmTrajs(false);

}

break;

}

4 測試效果

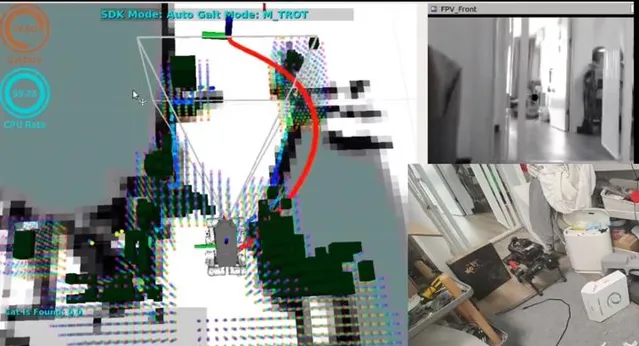

最終,采用該方案在HelloRobotic的Tinymal機器人平台上進行了測試,實作了2D雷達和視覺互補下的室內導航,借助雷達低成本導航定位的優勢同時,補償了其無法檢測低矮障礙物並實作避障的問題:

相關源碼下載請參考: