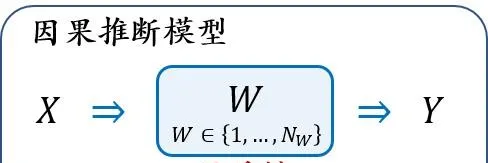

Definitions:

Unit: the individual under study; in studies of treatment effects, each patient sample.

Treatment/Intervention: the action applied to each study subject, here a medical treatment. W\in\{1,2,\dots,N_W\}

Potential Outcome: for each unit-treatment pair, the outcome that would result if that treatment were applied to the patient. Y(W=w)

Observed Outcome: the outcome actually observed after a treatment was applied to a patient. Y^F=Y(W=w), where w is the treatment actually used.

Counterfactual Outcome: the outcome the patient would have had under a treatment that was not actually used. Y^{CF}=Y(W=w'), which for a binary treatment is Y(W=1-w).

Pre-treatment Variables: variables that are not affected by the treatment or intervention, such as a patient's demographics and medical history. X

Post-treatment Variables: variables that are affected by the treatment or intervention, such as a patient's clinical presentation and medical test results.

Instrumental variables: variables that affect only the treatment assignment and not the outcome.

Treatment Effects

These quantities measure whether a treatment or intervention has an effect.

Average Treatment Effect (ATE):

\textrm{ATE}=\mathbb{E}[Y(W=1) - Y(W=0)]

Average Treatment effect on the Treated group (ATT): \textrm{ATT}=\mathbb{E}[Y(W=1)|W=1] - \mathbb{E}[Y(W=0)|W=1]

Conditional Average Treatment Effect (CATE):

\textrm{CATE}=\mathbb{E}[Y(W=1)|X=x] - \mathbb{E}[Y(W=0)|X=x]

Individual Treatment Effect (ITE):

\textrm{ITE}_i=Y_i(W=1) - Y_i(W=0)
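To make the four definitions concrete, the sketch below computes them on a small hypothetical table. Because the data are simulated, both potential outcomes Y(0) and Y(1) are listed for every unit, which is exactly what real observational data never provide; the age labels and all numbers are made up for illustration.

```python
from statistics import mean

# Hypothetical simulated units: (x, w, y0, y1).
# Both potential outcomes are known only because the data are simulated.
units = [
    ("young", 1, 2.0, 5.0),
    ("young", 0, 1.0, 4.0),
    ("old", 1, 6.0, 7.0),
    ("old", 0, 5.0, 6.0),
    ("old", 1, 4.0, 5.0),
]

# Factual outcome Y^F = Y(W=w): the only outcome real data would reveal.
y_factual = [y1 if w == 1 else y0 for _, w, y0, y1 in units]

# ITE_i = Y_i(W=1) - Y_i(W=0)
ite = [y1 - y0 for _, _, y0, y1 in units]

# ATE: mean ITE over all units.
ate = mean(ite)

# ATT: mean ITE over the units that actually received treatment (W=1).
att = mean(t for t, (_, w, _, _) in zip(ite, units) if w == 1)


def cate(x_value):
    """CATE: mean ITE among units with X = x_value."""
    return mean(t for t, (x, _, _, _) in zip(ite, units) if x == x_value)


print("Y^F:", y_factual)
print("ITE:", ite, "ATE:", ate, "ATT:", att)
print("CATE(young):", cate("young"), "CATE(old):", cate("old"))
```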

Goal: estimate the treatment effects above from the observed data \{X_i,W_i,Y_i^F\}_{i=1}^N.

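Because the potential outcomes are mutually exclusive, we can never observe the effects of different treatments on the same unit. Moreover, in practice W is not randomly assigned (so groups cannot be compared as in a randomized controlled trial), which means that naive estimates are distorted by selection bias and give inaccurate treatment effect estimates.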

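To estimate the effect of each treatment, we need to solve two problems:

(1) How can the observed empirical outcomes be used to estimate the potential outcomes?

(2) How should the counterfactual outcomes involved in treatment effect estimation be handled?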

Assumptions:

Assumption 1. Stable Unit Treatment Value Assumption (SUTVA): The potential outcomes for any unit do not vary with the treatment assigned to other units, and, for each unit, there are no different forms or versions of each treatment level, which lead to different potential outcomes. In other words, there is no interference between patients, and each treatment level has only one version.

Assumption 2. Ignorability: Given the background variable, X, treatment assignment W is independent of the potential outcomes, i.e., W\perp\kern-5pt\perp \{Y(W=0), Y(W=1)\}|X

Assumption 3. Positivity: For any value of X, treatment assignment is not deterministic: P(W=w|X=x)>0, ~~\forall~w~\textrm{and}~x. In other words, every treatment has a nonzero chance of being assigned.

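Based on Assumptions 1 & 2, we can solve the first problem by building an unbiased empirical estimator of the potential outcome: \begin{split} \mathbb{E}[Y(W=w)|X=x] &= \mathbb{E}[Y(W=w)|W=w,X=x]~~(\textrm{Ignorability}) \\ &= \mathbb{E}[Y^F|W=w,X=x]~~(\textrm{SUTVA}) \end{split} Positivity (Assumption 3) ensures that the conditioning event W=w, X=x has nonzero probability, so the last expectation can be estimated from data.

The second problem can be addressed with kernel-based methods (Counterfactual Mean Embeddings).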

Confounders

Confounders: variables that affect both the treatment and the outcome.

Selection bias: the distribution of the observed group differs from that of the group of interest, p(X_{obs})\neq p(X_\ast).

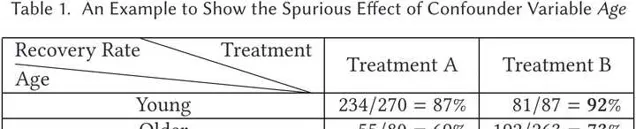

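In this sample the confounder is age. Ignoring age and looking only at the overall results, treatment A appears better than treatment B, yet B outperforms A within both the young and the old group. The two treatments were tested on groups with different age distributions (selection bias); ignoring the confounder behind this difference leads to a wrong assessment of the treatment effects.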

selection bias <=> covariate shift \quad p(X_{obs})\neq p(X_\ast)

Adjust confounders

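First estimate the conditional expectation of the outcome given the confounders within each treatment group, then average it over the distribution of the confounders.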

\begin{split} \hat{\textrm{ATE}} &= \sum_x p(x)\mathbb{E}[Y^F|X=x,W=1] - \sum_x p(x)\mathbb{E}[Y^F|X=x,W=0] \\ &= \sum_{\mathcal{X}^\ast} p(X\in\mathcal{X}^\ast) \left(\frac{1}{N_{\{i:X_i\in\mathcal{X}^\ast,W_i=1\}}} \sum_{\{i:X_i\in\mathcal{X}^\ast, W_i=1\}} Y_i^F \right) \\ &\quad - \sum_{\mathcal{X}^\ast} p(X\in\mathcal{X}^\ast) \left(\frac{1}{N_{\{j:X_j\in\mathcal{X}^\ast,W_j=0\}}} \sum_{\{j:X_j\in\mathcal{X}^\ast, W_j=0\}} Y_j^F \right) \end{split}

where \mathcal{X}^\ast is a set of X values, p(X\in\mathcal{X}^\ast) is the probability of the background variables falling in \mathcal{X}^\ast over the whole population, and \{i:X_i\in\mathcal{X}^\ast,W_i=w\} is the subgroup of units whose background variable values belong to \mathcal{X}^\ast and whose treatment is equal to w.
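A minimal sketch of the adjustment formula above, with the sets \mathcal{X}^\ast taken to be the strata young and old. The counts are hypothetical (they are not the numbers in the figure) and were chosen to reproduce the reversal described earlier, with W=1 for treatment B and W=0 for treatment A; the outcome is a binary recovery indicator, so stratum means are recovery rates.

```python
# Hypothetical stratified counts: counts[stratum][w] = (patients, recovered).
# W=1 is treatment B, W=0 is treatment A.
counts = {
    "young": {0: (90, 81), 1: (10, 10)},
    "old": {0: (10, 3), 1: (90, 36)},
}


def naive_difference(counts):
    """Pooled recovery rate of W=1 minus W=0, ignoring the confounder."""
    rate = {}
    for w in (0, 1):
        n = sum(c[w][0] for c in counts.values())
        r = sum(c[w][1] for c in counts.values())
        rate[w] = r / n
    return rate[1] - rate[0]


def adjusted_ate(counts):
    """Stratum mean differences weighted by p(X in stratum) over the whole population."""
    total = sum(c[w][0] for c in counts.values() for w in (0, 1))
    ate = 0.0
    for c in counts.values():
        p_stratum = (c[0][0] + c[1][0]) / total
        mean_treated = c[1][1] / c[1][0]  # mean Y^F of W=1 units in this stratum
        mean_control = c[0][1] / c[0][0]  # mean Y^F of W=0 units in this stratum
        ate += p_stratum * (mean_treated - mean_control)
    return ate


print(naive_difference(counts), adjusted_ate(counts))
```

With these numbers the pooled comparison favors A (a naive difference of about -0.38), while the age-adjusted estimate favors B (about +0.1).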

Causal Inference Methods relying on Three Assumptions

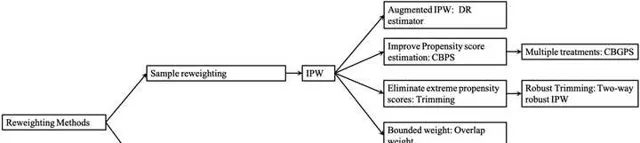

Re-weighting Methods

Propensity Score: e(x)=Pr(W=1|X=x)

Propensity Score Based Sample Re-weighting

1. Inverse propensity weighting (IPW): assigns each unit a weight r: r=\frac{W}{e(x)}+\frac{1-W}{1-e(x)}

IPW estimator:

\hat{\textrm{ATE}}_{IPW} = \frac{1}{n}\sum_{i=1}^n\frac{W_iY_i^F}{\hat{e}(x_i)} - \frac{1}{n}\sum_{i=1}^n \frac{(1-W_i)Y_i^F}{1-\hat{e}(x_i)}.

In practice, the normalized version is often used:

\hat{\textrm{ATE}}_{IPW} = \frac{1}{n}\sum_{i=1}^n\frac{W_iY_i^F}{\hat{e}(x_i)}/\frac{1}{n}\sum_{i=1}^n\frac{W_i}{\hat{e}(x_i)} - \frac{1}{n}\sum_{i=1}^n\frac{(1-W_i)Y_i^F}{1-\hat{e}(x_i)} / \frac{1}{n}\sum_{i=1}^n \frac{(1-W_i)}{1-\hat{e}(x_i)}.
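The two IPW estimators can be sketched in a few lines of Python, assuming the propensity scores \hat{e}(x_i) have already been estimated and are passed in as a list; the function names and toy data are illustrative.

```python
def ipw_ate(w, y, e):
    """Plain IPW estimate of the ATE; e[i] is the estimated propensity e_hat(x_i)."""
    n = len(y)
    treated = sum(wi * yi / ei for wi, yi, ei in zip(w, y, e)) / n
    control = sum((1 - wi) * yi / (1 - ei) for wi, yi, ei in zip(w, y, e)) / n
    return treated - control


def normalized_ipw_ate(w, y, e):
    """Normalized IPW: each arm's weights are rescaled to sum to one (the 1/n factors cancel)."""
    num1 = sum(wi * yi / ei for wi, yi, ei in zip(w, y, e))
    den1 = sum(wi / ei for wi, ei in zip(w, e))
    num0 = sum((1 - wi) * yi / (1 - ei) for wi, yi, ei in zip(w, y, e))
    den0 = sum((1 - wi) / (1 - ei) for wi, ei in zip(w, e))
    return num1 / den1 - num0 / den0


# Hypothetical toy data with a constant propensity of 0.5.
w = [1, 0, 0]
y = [2.0, 1.0, 1.0]
e = [0.5, 0.5, 0.5]
shifted = [yi + 10.0 for yi in y]  # same data with a constant added to every outcome

print(ipw_ate(w, y, e), normalized_ipw_ate(w, y, e))
print(ipw_ate(w, shifted, e), normalized_ipw_ate(w, shifted, e))
```

In this toy case, adding a constant to every outcome moves the plain IPW estimate (from 0 to -20/3) but leaves the normalized estimate at 1, because the normalized weights in each arm sum to one.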

2. Doubly Robust (DR) estimator / Augmented IPW:

Combining the propensity score with outcome regression reduces the large ATE error that arises when the propensity score is estimated inaccurately: the estimator remains consistent if either the propensity model or the outcome model is correctly specified. \begin{split} \hat{\textrm{ATE}}_{DR} &= \frac{1}{n}\sum_{i=1}^n\left\{\left[\frac{W_iY_i^F}{\hat{e}(x_i)} - \frac{W_i-\hat{e}(x_i)}{\hat{e}(x_i)}\hat{m}(1,x_i)\right] - \left[\frac{(1-W_i)Y_i^F}{1-\hat{e}(x_i)} + \frac{W_i-\hat{e}(x_i)}{1-\hat{e}(x_i)}\hat{m}(0,x_i) \right]\right\} \\ &= \frac{1}{n}\sum_{i=1}^n\left\{\hat{m}(1,x_i) + \frac{W_i(Y_i^F-\hat{m}(1,x_i))}{\hat{e}(x_i)} - \hat{m}(0,x_i) - \frac{(1-W_i)(Y_i^F-\hat{m}(0,x_i))}{1-\hat{e}(x_i)} \right\} \end{split} Here \hat{m}(1,x_i) and \hat{m}(0,x_i) are the regression model estimates for the treated and control groups, respectively.
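A sketch of the second form of \hat{\textrm{ATE}}_{DR}, assuming the nuisance predictions \hat{e}(x_i), \hat{m}(1,x_i) and \hat{m}(0,x_i) are already fitted and supplied per unit; the fitting step is omitted, and the function name and toy data are hypothetical.

```python
def aipw_ate(w, y, e, m1, m0):
    """Doubly robust (augmented IPW) ATE estimate.

    e[i], m1[i], m0[i] are precomputed predictions e_hat(x_i), m_hat(1, x_i), m_hat(0, x_i).
    """
    total = 0.0
    for wi, yi, ei, m1i, m0i in zip(w, y, e, m1, m0):
        treated_term = m1i + wi * (yi - m1i) / ei              # regression + IPW-weighted residual
        control_term = m0i + (1 - wi) * (yi - m0i) / (1 - ei)
        total += treated_term - control_term
    return total / len(y)


# Hypothetical inputs where the outcome models fit the observed outcomes exactly.
w = [1, 0, 1]
m1 = [3.0, 5.0, 4.0]
m0 = [1.0, 2.0, 2.0]
y = [3.0, 2.0, 4.0]        # y = m1 for treated units, y = m0 for the control unit
e = [0.2, 0.7, 0.9]        # arbitrary propensity values
print(aipw_ate(w, y, e, m1, m0))
```

When the outcome models fit the observed outcomes exactly, both residual corrections vanish and the estimate reduces to the mean of \hat{m}(1,x_i)-\hat{m}(0,x_i), whatever propensity values are supplied.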

3. covariate balancing propensity score (CBPS):

Improves the propensity score estimate directly, by choosing it so that the covariates are balanced:

\mathbb{E}\left[\frac{W_i\tilde{x}_i}{e(x_i;\beta)} - \frac{(1-W_i)\tilde{x}_i}{1-e(x_i;\beta)}\right]=0

where \tilde{x}_i is a predefined vector-valued measurable function of x_i .
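As an illustration, the sketch below solves the CBPS condition in the just-identified case, assuming a logistic propensity model e(x;\beta)=\sigma(\beta_0+\beta_1x) with a single covariate and \tilde{x}_i=(1,x_i). For this model the sample moment happens to be the gradient of a strictly concave function (derived in the docstring), so a damped Newton ascent finds its root; this solver, the helper names and the toy data are choices made for the sketch, not part of the original method description.

```python
import math


def cbps_logistic(x, w, max_iter=50, tol=1e-10):
    """Just-identified CBPS sketch for e(x; b) = sigmoid(b0 + b1 * x).

    Solves mean[W*xt/e - (1-W)*xt/(1-e)] = 0 with xt = (1, x). For the logistic
    model this moment is the gradient of the strictly concave function
        F(b) = mean[W*(s - exp(-s)) - (1-W)*(s + exp(s))],  s = b0 + b1*x,
    so maximizing F with damped Newton steps drives the moment to zero.
    """
    n = len(x)

    def objective(b):
        total = 0.0
        for xi, wi in zip(x, w):
            s = b[0] + b[1] * xi
            total += wi * (s - math.exp(-s)) - (1 - wi) * (s + math.exp(s))
        return total / n

    def moment_and_hessian(b):
        g = [0.0, 0.0]
        h = [[0.0, 0.0], [0.0, 0.0]]
        for xi, wi in zip(x, w):
            s = b[0] + b[1] * xi
            xt = (1.0, xi)
            # W/e = W*(1 + exp(-s)) and (1-W)/(1-e) = (1-W)*(1 + exp(s))
            coef = wi * (1.0 + math.exp(-s)) - (1 - wi) * (1.0 + math.exp(s))
            curv = wi * math.exp(-s) + (1 - wi) * math.exp(s)
            for a in range(2):
                g[a] += coef * xt[a] / n
                for c in range(2):
                    h[a][c] -= curv * xt[a] * xt[c] / n
        return g, h

    b = [0.0, 0.0]
    for _ in range(max_iter):
        g, h = moment_and_hessian(b)
        if max(abs(g[0]), abs(g[1])) < tol:
            break
        det = h[0][0] * h[1][1] - h[0][1] * h[1][0]
        # Newton direction d = -H^{-1} g, an ascent direction since H is negative definite.
        d = [-(h[1][1] * g[0] - h[0][1] * g[1]) / det,
             -(h[0][0] * g[1] - h[1][0] * g[0]) / det]
        f_old, step = objective(b), 1.0
        for _halving in range(40):  # backtrack until the step does not decrease F
            cand = [b[0] + step * d[0], b[1] + step * d[1]]
            if objective(cand) >= f_old:
                break
            step /= 2.0
        b = cand
    return b


# Hypothetical data; both groups share covariate values 1, 2, 3 (overlap).
x = [1.0, 2.0, 3.0, 4.0, 5.0, 0.0, 1.0, 2.0, 3.0]
w = [1, 1, 1, 1, 1, 0, 0, 0, 0]
beta = cbps_logistic(x, w)
e = [1.0 / (1.0 + math.exp(-(beta[0] + beta[1] * xi))) for xi in x]
print(beta)
```

At the solution, the inverse-propensity-weighted sums of 1 and of x agree between the treated and control groups, which is exactly the balance condition above with \tilde{x}_i=(1,x_i).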

Confounder Balancing

Data-Driven Variable Decomposition ( \textrm{D}^2\textrm{VD} ) :

all observed variables = adjustment variables (variables that affect only the outcome) || confounders || irrelevant variables.

Adjusted outcome:

Y^\ast_{\mathrm{D^2VD}} = \left(Y^F-\phi(\mathbf{z})\right) \frac{W-p(x)}{p(x)(1-p(x))}

\mathbf{z} denotes the adjustment variables.

ATE estimator for \textrm{D}^2\textrm{VD} :

\textrm{ATE}_{\mathrm{D^2VD}} = \mathbb{E}\left[\left(Y^F-\phi(\mathbf{z})\right)\frac{W-p(x)}{p(x)(1-p(x))}\right]

\begin{split} \textrm{minimize}&~||(Y^F-X\alpha)\odot R(\beta)-X\gamma||^2_2,\\ &\textrm{s.t.}\quad \sum_{i=1}^N \log\left(1+\exp\left((1-2W_i)\cdot X_i\beta\right)\right) < \tau,\\ &||\alpha||_1\leq\lambda,~||\beta||_1\leq\delta,~||\gamma||_1\leq\eta,~||\alpha\odot\beta||_2^2=0 \end{split}

Here \alpha separates out the adjustment variables, \beta separates out the confounders, and \gamma removes the irrelevant variables.
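Once p(x) and \phi(\mathbf{z}) have been estimated, the \textrm{D}^2\textrm{VD} ATE is simply the sample mean of the adjusted outcome. A sketch, assuming the fitted values p(x_i) and \phi(\mathbf{z}_i) are supplied per unit; the function name and toy inputs are hypothetical.

```python
def d2vd_ate(y, w, p, phi):
    """Sample mean of the adjusted outcome (Y^F - phi(z)) * (W - p(x)) / (p(x) * (1 - p(x)))."""
    adjusted = [(yi - fi) * (wi - pi) / (pi * (1 - pi))
                for yi, wi, pi, fi in zip(y, w, p, phi)]
    return sum(adjusted) / len(adjusted)


# Hypothetical toy inputs: constant p(x) = 0.5 and equal group sizes.
y = [3.0, 5.0, 1.0, 1.0]
w = [1, 1, 0, 0]
p = [0.5, 0.5, 0.5, 0.5]
print(d2vd_ate(y, w, p, [0.0] * 4), d2vd_ate(y, w, p, [1.0] * 4))
```

With p(x_i)=0.5 for every unit, equal group sizes and \phi\equiv0, the estimate equals the difference in group means (here 4-1=3), and a constant \phi cancels out.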

Stratification Methods

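Split the treated and control groups into homogeneous subgroups so that, within each subgroup, the data can be regarded as a randomized controlled trial. After estimating the CATE within each subgroup, aggregate the estimates to obtain the overall ATE.

This is a divide-and-conquer idea: the data as a whole cannot be treated as a randomized experiment under a single comparison, but after splitting into subgroups, each subgroup can be.

\textrm{ATE}_{\textrm{strat}} = \hat\tau^{\textrm{strat}} = \sum_{j=1}^J q(j) \left[\bar{Y}_t(j) - \bar{Y}_c(j)\right]

where q(j)=\frac{N(j)}{N}, and \bar{Y}_t(j) and \bar{Y}_c(j) are the mean outcomes of the treated and control units in stratum j.

Subgroups are mainly formed with the equal frequency approach, which splits the units (typically by propensity score) into strata containing the same number of units.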
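A sketch of equal-frequency stratification on the propensity score followed by \hat\tau^{\textrm{strat}}, assuming the propensity scores are given; the function name is hypothetical, and the toy data are built so that every stratum contains both treated and control units with a true effect of 2.

```python
def stratified_ate(e, w, y, n_strata):
    """Equal-frequency stratification on the propensity score.

    Units are sorted by e and cut into n_strata groups of (nearly) equal size;
    each stratum's treated-minus-control mean difference is weighted by q(j) = N(j) / N.
    """
    order = sorted(range(len(e)), key=lambda i: e[i])
    n = len(order)
    ate = 0.0
    for j in range(n_strata):
        idx = order[j * n // n_strata:(j + 1) * n // n_strata]
        treated = [y[i] for i in idx if w[i] == 1]
        control = [y[i] for i in idx if w[i] == 0]
        if not treated or not control:
            raise ValueError(f"stratum {j} lacks treated or control units")
        diff = sum(treated) / len(treated) - sum(control) / len(control)
        ate += len(idx) / n * diff
    return ate


# Hypothetical data: baseline outcome differs by stratum, treatment adds 2.
e = [0.1, 0.15, 0.2, 0.25, 0.4, 0.45, 0.5, 0.55, 0.7, 0.75, 0.8, 0.85]
w = [0, 1, 0, 0, 1, 0, 1, 0, 1, 1, 0, 1]
base = [1.0] * 4 + [5.0] * 4 + [9.0] * 4
y = [b + 2 * wi for b, wi in zip(base, w)]
print(stratified_ate(e, w, y, 3))
```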

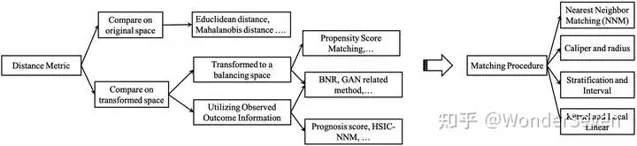

Matching Methods

Approximate each unit's counterfactual outcome using similar units found in the opposite observed group.

\hat{Y}_i(0) = \begin{cases} Y_i & \textrm{if } W_i=0, \\ \frac{1}{\#\mathcal{J}(i)}\sum_{l\in\mathcal{J}(i)}Y_l & \textrm{if } W_i=1; \end{cases} and \hat{Y}_i(1) = \begin{cases} \frac{1}{\#\mathcal{J}(i)}\sum_{l\in\mathcal{J}(i)}Y_l & \textrm{if } W_i=0, \\ Y_i & \textrm{if } W_i=1, \end{cases}

where \mathcal{J}(i) is the matched neighbors of unit i in the opposite treatment group.

1. Distance Metric:

D(\mathbf{x}_i, \mathbf{x}_j)=|e_i-e_j|

D(\mathbf{x}_i, \mathbf{x}_j)=|\textrm{logit}(e_i)-\textrm{logit}(e_j)|\quad recommended

D(\mathbf{x}_i, \mathbf{x}_j)=\|\hat{Y}_c(\mathbf{x}_i) - \hat{Y}_c(\mathbf{x}_j)\|_2 (prognosis score: the difference in estimated control outcomes)

HSIC-NNM: M_w=\textrm{argmax}_{M_w}\textrm{HSIC}(\mathbf{X}_wM_w,Y_w^F)-\mathcal{R}(M_w), which matches units in a learned projection space
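The matching estimator above can be sketched with one nearest neighbour per unit (\#\mathcal{J}(i)=1), matching with replacement on the recommended logit-propensity distance; the propensity scores are assumed to be given, and the function name and toy data are hypothetical.

```python
import math


def logit(p):
    return math.log(p / (1 - p))


def nn_matching_ate(e, w, y):
    """1-nearest-neighbour matching with replacement on |logit(e_i) - logit(e_j)|.

    Each unit's missing potential outcome is imputed from the closest unit
    in the opposite treatment group; the ATE is the mean imputed difference.
    """
    score = [logit(p) for p in e]
    treated = [i for i in range(len(w)) if w[i] == 1]
    control = [i for i in range(len(w)) if w[i] == 0]
    effects = []
    for i in range(len(w)):
        pool = control if w[i] == 1 else treated
        j = min(pool, key=lambda k: abs(score[i] - score[k]))
        y1, y0 = (y[i], y[j]) if w[i] == 1 else (y[j], y[i])
        effects.append(y1 - y0)
    return sum(effects) / len(effects)


# Hypothetical data: each treated unit has a control unit with a similar propensity.
e = [0.2, 0.5, 0.8, 0.25, 0.55, 0.75]
w = [1, 1, 1, 0, 0, 0]
y = [4.0, 5.0, 6.0, 1.0, 2.0, 3.0]
print(nn_matching_ate(e, w, y))
```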

2. Matching Algorithm:

(1) Nearest Neighbor Matching; (2) Caliper and Radius; (3) Stratification and Interval; (4) Kernel and Local Linear; as well as Coarsened Exact Matching (CEM).

Because the matched samples can be inspected directly, matching-based algorithms are highly interpretable.

3. Feature Selection:

Tree-based Methods

classification trees & regression trees

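Classification And Regression Trees (CART), Bayesian Additive Regression Trees (BART)

An advantage of trees is that their leaves can be narrow along directions in which the signal changes quickly and wide along other directions; when the feature space is high-dimensional, this can lead to a large increase in statistical power.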

Representation Learning Methods

1. Domain Adaptation Based on Representation Learning

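The core idea is to treat the observed group as the training domain (with data and labels) and the unobserved group as the test domain (data only), which turns the problem into unsupervised domain adaptation (UDA).

Given a representation function \Phi:\mathcal{X}\rightarrow\mathcal{R} and an outcome hypothesis h:\mathcal{R}\times\{0,1\}\rightarrow\mathcal{Y}, the integral probability metric (IPM) based objective function is: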

\min_{h,\Phi}\frac{1}{n}\sum_{i=1}^nr_i\cdot \mathcal{L}\left(h(\Phi(x_i),W_i), y_i\right) + \lambda\cdot R(h) + \alpha\cdot \textrm{IPM}_G\left(\{\Phi(x_i)\}_{i:W_i=0},\{\Phi(x_i)\}_{i:W_i=1}\right)

Here r_i=\frac{W_i}{2u}+\frac{1-W_i}{2(1-u)} corrects the mismatch in group sizes, u=\frac{1}{n}\sum_{i=1}^nW_i, and R denotes the model complexity.

The IPM can be instantiated with the Wasserstein distance or MMD.
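A sketch of two ingredients of the objective above: the group-size weights r_i and a biased estimate of squared MMD with a Gaussian kernel as the IPM. The representations \Phi(x_i) are assumed to be precomputed tuples; the kernel bandwidth `sigma`, the function names and the toy points are assumptions of this sketch.

```python
import math


def balancing_weights(w):
    """r_i = W_i / (2u) + (1 - W_i) / (2(1 - u)), where u is the fraction treated."""
    u = sum(w) / len(w)
    return [wi / (2 * u) + (1 - wi) / (2 * (1 - u)) for wi in w]


def rbf(a, b, sigma=1.0):
    """Gaussian kernel between two representation vectors."""
    d2 = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-d2 / (2 * sigma ** 2))


def mmd2(xs, ys, sigma=1.0):
    """Biased estimate of squared MMD between two sets of representations."""
    kxx = sum(rbf(a, b, sigma) for a in xs for b in xs) / len(xs) ** 2
    kyy = sum(rbf(a, b, sigma) for a in ys for b in ys) / len(ys) ** 2
    kxy = sum(rbf(a, b, sigma) for a in xs for b in ys) / (len(xs) * len(ys))
    return kxx + kyy - 2 * kxy


# Hypothetical representations of control (W=0) and treated (W=1) units.
phi_control = [(0.0,), (1.0,)]
phi_treated = [(3.0,), (4.0,)]
print(balancing_weights([1, 0, 0, 0]))
print(mmd2(phi_control, phi_control), mmd2(phi_control, phi_treated))
```

The weights sum to n, so each treatment group contributes half of the total weight regardless of its size; the MMD term is zero for identical sets and grows as the two groups' representations drift apart.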

2. Matching Based on Representation Learning

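This mainly addresses the problem that variables irrelevant to the outcome can mislead matching into wrong matches.

(1) Learning a projection matrix; (2) combining deep feature selection with representation learning.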

3. Continual Learning Based on Representation Learning

(1) Use feature representation distillation to retain previously learned knowledge.

Multi-task Learning Methods

The core idea is to use three branches: a shared branch, a treated-group-specific branch, and a control-group-specific branch. Selection bias can be addressed with a propensity-dropout regularization scheme.

Bayesian methods are used to handle the uncertainty of counterfactual outcomes.

Meta-Learning Methods

Procedure:

Step 1: Estimate the conditional mean outcome E[Y|X=x]

Step 2: Derive the CATE estimator based on the difference of results
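One well-known instance of this two-step recipe is the T-learner, which fits a separate outcome model for each treatment arm and takes their difference as the CATE. The sketch below assumes a single covariate and uses ordinary least squares for both arms; the model choice, function names and toy data are illustrative.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x with a single covariate."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((xi - mx) ** 2 for xi in xs)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope


def t_learner(x, w, y):
    """Return a CATE function built from per-arm regressions."""
    # Step 1: estimate E[Y | X=x, W=1] and E[Y | X=x, W=0] separately.
    arm = {}
    for g in (0, 1):
        xs = [xi for xi, wi in zip(x, w) if wi == g]
        ys = [yi for yi, wi in zip(y, w) if wi == g]
        arm[g] = fit_line(xs, ys)
    (a0, b0), (a1, b1) = arm[0], arm[1]

    # Step 2: the CATE estimate is the difference of the two fitted conditional means.
    def cate(x_new):
        return (a1 + b1 * x_new) - (a0 + b0 * x_new)

    return cate


# Hypothetical data: treated outcomes follow 1 + 2x, control outcomes follow 1 + x.
x = [0.0, 1.0, 2.0, 3.0, 0.0, 1.0, 2.0, 3.0]
w = [1, 1, 1, 1, 0, 0, 0, 0]
y = [1.0, 3.0, 5.0, 7.0, 1.0, 2.0, 3.0, 4.0]
cate = t_learner(x, w, y)
print(cate(0.0), cate(2.0))
```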

Methods Relaxing Three Assumptions

1. Relaxing Stable Unit Treatment Value Assumption

2. Relaxing Unconfoundedness Assumption

observed and unobserved confounders

However, an accurate treatment effect estimator can still be obtained using only a subset of the confounders.

3. Relaxing Positivity Assumption

Reference

[1] Liuyi Yao, Zhixuan Chu, Sheng Li, Yaliang Li, Jing Gao, Aidong Zhang. A Survey on Causal Inference. ACM Transactions on Knowledge Discovery from Data, 2021. [URL]