Simultaneous Localization and Mapping (SLAM)

1. 【SLAM】Incremental Visual-Inertial 3D Mesh Generation with Structural Regularities

Authors: Antoni Rosinol, Torsten Sattler, Marc Pollefeys, Luca Carlone

Link:

https://arxiv.org/abs/1903.01067v2

Code:

https://github.com/ToniRV/Kimera-VIO-Evaluation

https://github.com/MIT-SPARK/Kimera

Abstract:

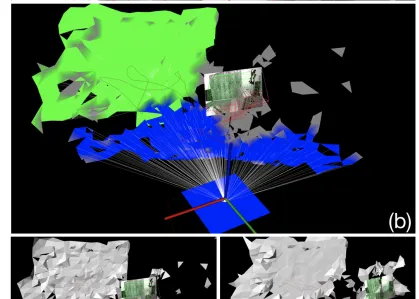

Visual-Inertial Odometry (VIO) algorithms typically rely on a point cloud representation of the scene that does not model the topology of the environment. A 3D mesh instead offers a richer, yet lightweight, model. Nevertheless, building a 3D mesh out of the sparse and noisy 3D landmarks triangulated by a VIO algorithm often results in a mesh that does not fit the real scene. In order to regularize the mesh, previous approaches decouple state estimation from the 3D mesh regularization step, and either limit the 3D mesh to the current frame or let the mesh grow indefinitely. We propose instead to tightly couple mesh regularization and state estimation by detecting and enforcing structural regularities in a novel factor-graph formulation. We also propose to incrementally build the mesh by restricting its extent to the time-horizon of the VIO optimization; the resulting 3D mesh covers a larger portion of the scene than a per-frame approach while its memory usage and computational complexity remain bounded. We show that our approach successfully regularizes the mesh, while improving localization accuracy, when structural regularities are present, and remains operational in scenes without regularities.
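The two ideas in this abstract, penalizing landmarks that stray from a detected plane and keeping only the part of the mesh that falls inside the optimization window, can be illustrated with a small sketch. This is not the authors' factor-graph code: `Landmark`, `coplanarity_cost`, and `prune_to_horizon` are hypothetical names, the plane is assumed to be given as a unit normal and an offset, and a real system would hand such residuals to a nonlinear least-squares solver alongside the visual and inertial terms.

```python
from dataclasses import dataclass


@dataclass
class Landmark:
    position: tuple   # (x, y, z) in the world frame
    last_seen: float  # timestamp of the latest observation, in seconds


def plane_residual(normal, offset, point):
    """Signed distance n.p - d from the plane {p : n.p = d} (unit normal assumed)."""
    return sum(n * p for n, p in zip(normal, point)) - offset


def coplanarity_cost(normal, offset, landmarks):
    """Sum of squared point-to-plane distances for landmarks assigned to one plane."""
    return sum(plane_residual(normal, offset, lm.position) ** 2 for lm in landmarks)


def prune_to_horizon(landmarks, now, horizon):
    """Keep only landmarks observed within the last `horizon` seconds."""
    return [lm for lm in landmarks if now - lm.last_seen <= horizon]
```

Pruning by time horizon is what keeps memory and computation bounded in this sketch: the cost is only ever evaluated over landmarks that survive `prune_to_horizon`.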

2. 【SLAM】LiDARTag: A Real-Time Fiducial Tag System for Point Clouds

Authors: Jiunn-Kai Huang, Shoutian Wang, Maani Ghaffari, Jessy W. Grizzle

Link:

https://arxiv.org/abs/1908.10349v3

Code:

https://github.com/UMich-BipedLab/extrinsic_lidar_camera_calibration

https://github.com/UMich-BipedLab/LiDARTag

Abstract:

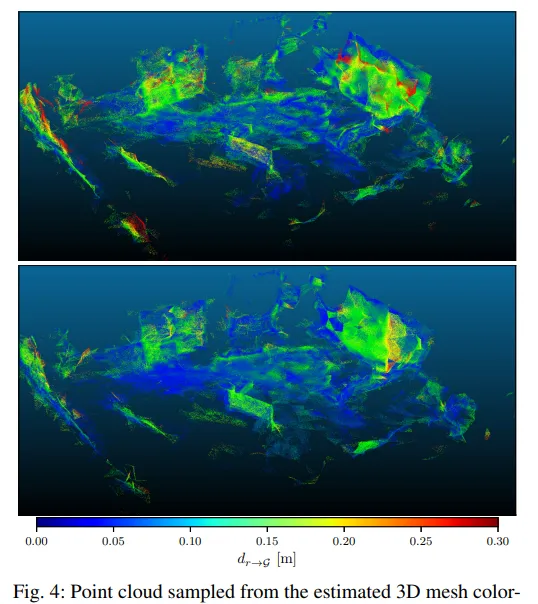

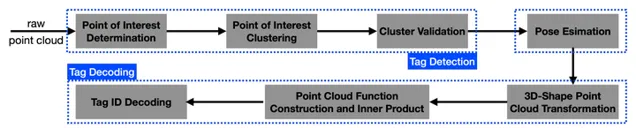

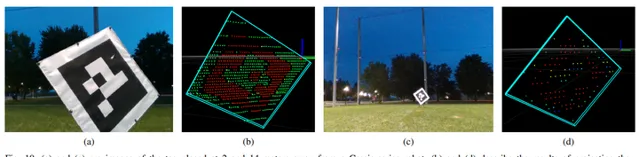

Image-based fiducial markers are useful in problems such as object tracking in cluttered or textureless environments, camera (and multi-sensor) calibration tasks, and vision-based simultaneous localization and mapping (SLAM). The state-of-the-art fiducial marker detection algorithms rely on the consistency of the ambient lighting. This paper introduces LiDARTag, a novel fiducial tag design and detection algorithm suitable for light detection and ranging (LiDAR) point clouds. The proposed method runs in real-time and can process data at 100 Hz, which is faster than the currently available LiDAR sensor frequencies. Because of the LiDAR sensors' nature, rapidly changing ambient lighting will not affect the detection of a LiDARTag; hence, the proposed fiducial marker can operate in a completely dark environment. In addition, the LiDARTag nicely complements and is compatible with existing visual fiducial markers, such as AprilTags, allowing for efficient multi-sensor fusion and calibration tasks. We further propose a concept of minimizing a fitting error between a point cloud and the marker's template to estimate the marker's pose. The proposed method achieves millimeter error in translation and a few degrees in rotation. Due to LiDAR returns' sparsity, the point cloud is lifted to a continuous function in a reproducing kernel Hilbert space where the inner product can be used to determine a marker's ID. The experimental results, verified by a motion capture system, confirm that the proposed method can reliably provide a tag's pose and unique ID code. The rejection of false positives is validated on the Google Cartographer indoor dataset and the Honda pD outdoor dataset.
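To make the ID-decoding idea more concrete: a sparse point cloud can be treated as a weighted sum of kernel functions centered on its points, and two such functions can then be compared through their inner product. The sketch below is only an illustration under assumptions of my own: a Gaussian kernel, per-point weights standing in for intensity, a normalized score, and hypothetical names (`rkhs_inner`, `decode_id`). The kernel and templates used in the paper may differ.

```python
import math


def gaussian_kernel(x, y, length_scale=0.1):
    """Gaussian (RBF) kernel between two points given as coordinate tuples."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2 * length_scale ** 2))


def rkhs_inner(cloud_a, cloud_b, length_scale=0.1):
    """Inner product of f = sum_i a_i k(., x_i) and g = sum_j b_j k(., y_j).

    Each cloud is a list of (point, weight) pairs.
    """
    return sum(
        wa * wb * gaussian_kernel(pa, pb, length_scale)
        for pa, wa in cloud_a
        for pb, wb in cloud_b
    )


def decode_id(observed, templates, length_scale=0.1):
    """Return the template ID whose function is most aligned with the observation."""
    def score(template):
        norm = math.sqrt(
            rkhs_inner(observed, observed, length_scale)
            * rkhs_inner(template, template, length_scale)
        )
        return rkhs_inner(observed, template, length_scale) / norm

    return max(templates, key=lambda tag_id: score(templates[tag_id]))
```

Because the comparison is between continuous functions rather than matched point pairs, the observed cloud and the template do not need the same number of points, which is the property that makes this attractive for sparse LiDAR returns.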

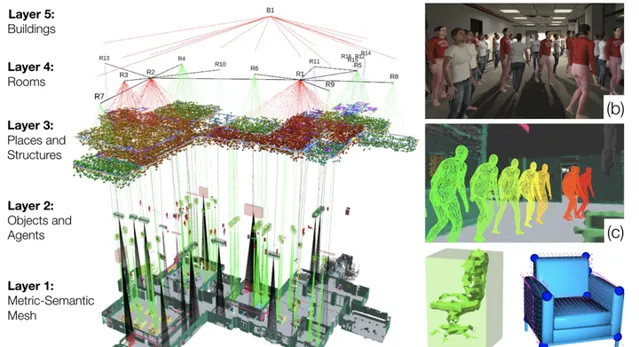

3. 【SLAM】3D Dynamic Scene Graphs: Actionable Spatial Perception with Places, Objects, and Humans

Authors: Antoni Rosinol, Arjun Gupta, Marcus Abate, Jingnan Shi, Luca Carlone

Link:

https://arxiv.org/abs/2002.06289v2

Code:

https://github.com/ToniRV/Kimera-VIO-Evaluation

https://github.com/MIT-SPARK/Kimera

Abstract:

We present a unified representation for actionable spatial perception: 3D Dynamic Scene Graphs. Scene graphs are directed graphs where nodes represent entities in the scene (e.g. objects, walls, rooms), and edges represent relations (e.g. inclusion, adjacency) among nodes. Dynamic scene graphs (DSGs) extend this notion to represent dynamic scenes with moving agents (e.g. humans, robots), and to include actionable information that supports planning and decision-making (e.g. spatio-temporal relations, topology at different levels of abstraction). Our second contribution is to provide the first fully automatic Spatial PerceptIon eNgine (SPIN) to build a DSG from visual-inertial data. We integrate state-of-the-art techniques for object and human detection and pose estimation, and we describe how to robustly infer object, robot, and human nodes in crowded scenes. To the best of our knowledge, this is the first paper that reconciles visual-inertial SLAM and dense human mesh tracking. Moreover, we provide algorithms to obtain hierarchical representations of indoor environments (e.g. places, structures, rooms) and their relations. Our third contribution is to demonstrate the proposed spatial perception engine in a photo-realistic Unity-based simulator, where we assess its robustness and expressiveness. Finally, we discuss the implications of our proposal on modern robotics applications. 3D Dynamic Scene Graphs can have a profound impact on planning and decision-making, human-robot interaction, long-term autonomy, and scene prediction.
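The data structure described in this abstract, a directed graph whose nodes are scene entities and whose edges are relations, can be sketched in a few lines. The class and field names below (`Node`, `SceneGraph`, `layer`, `track`, `targets`) are hypothetical and only meant to show the shape of the idea; they are not taken from the authors' code. The `track` list is one simple way, assumed here, to attach time-stamped positions to moving agents.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    node_id: str
    layer: str                                 # e.g. "object", "agent", "place", "room"
    track: list = field(default_factory=list)  # (time, position) samples for moving agents


@dataclass
class SceneGraph:
    nodes: dict = field(default_factory=dict)  # node_id -> Node
    edges: list = field(default_factory=list)  # (source_id, relation, target_id)

    def add_node(self, node_id, layer):
        self.nodes[node_id] = Node(node_id, layer)
        return self.nodes[node_id]

    def add_edge(self, source_id, relation, target_id):
        # Directed edge; both endpoints must already exist in the graph.
        if source_id not in self.nodes or target_id not in self.nodes:
            raise KeyError("both endpoints must be added before the edge")
        self.edges.append((source_id, relation, target_id))

    def targets(self, source_id, relation):
        """IDs of all nodes reachable from source_id through one edge of this relation."""
        return [t for s, r, t in self.edges if s == source_id and r == relation]
```

A query such as `graph.targets("kitchen", "contains")` is the kind of lookup a planner could use to move between abstraction levels, for example from a room to the objects and agents inside it.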

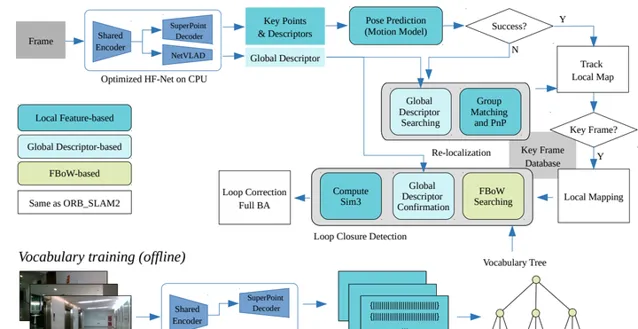

4. 【SLAM】DXSLAM: A Robust and Efficient Visual SLAM System with Deep Features

Authors: Dongjiang Li, Xuesong Shi, Qiwei Long, Shenghui Liu, Wei Yang, Fangshi Wang, Qi Wei, Fei Qiao

Link:

https://arxiv.org/abs/2008.05416v1

Code:

https://github.com/cedrusx/dxslam_ros

https://github.com/ivipsourcecode/dxslam

Abstract:

A robust and efficient Simultaneous Localization and Mapping (SLAM) system is essential for robot autonomy. For visual SLAM algorithms, though the theoretical framework has been well established for most aspects, feature extraction and association is still empirically designed in most cases, and can be vulnerable in complex environments. This paper shows that feature extraction with deep convolutional neural networks (CNNs) can be seamlessly incorporated into a modern SLAM framework. The proposed SLAM system utilizes a state-of-the-art CNN to detect keypoints in each image frame, and to give not only keypoint descriptors, but also a global descriptor of the whole image. These local and global features are then used by different SLAM modules, resulting in much more robustness against environmental changes and viewpoint changes compared with using hand-crafted features. We also train a visual vocabulary of local features with a Bag of Words (BoW) method. Based on the local features, global features, and the vocabulary, a highly reliable loop closure detection method is built. Experimental results show that all the proposed modules significantly outperform the baseline, and the full system achieves much lower trajectory errors and much higher correct rates on all evaluated data. Furthermore, by optimizing the CNN with Intel OpenVINO toolkit and utilizing the Fast BoW library, the system benefits greatly from the SIMD (single-instruction-multiple-data) techniques in modern CPUs. The full system can run in real-time without any GPU or other accelerators.
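One plausible way to combine a global image descriptor with local keypoint descriptors for loop closure is a two-stage check: rank past keyframes by global similarity, then verify the best candidates with local matches. The sketch below follows that pattern under assumptions of my own. The dictionary layout (`"id"`, `"global"`, `"local"`), the function names, and the thresholds are hypothetical, and the BoW vocabulary stage mentioned in the abstract is left out for brevity, so this should not be read as the DXSLAM pipeline itself.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors; 0.0 if either has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def count_local_matches(desc_a, desc_b, max_dist=0.3):
    """Count descriptors in desc_a whose nearest neighbor in desc_b is within max_dist."""
    count = 0
    for da in desc_a:
        nearest = min((math.dist(da, db) for db in desc_b), default=math.inf)
        if nearest <= max_dist:
            count += 1
    return count


def detect_loop(query, keyframes, top_k=3, min_matches=2):
    """Return the ID of the best verified loop candidate, or None if none passes."""
    ranked = sorted(
        keyframes,
        key=lambda kf: cosine(query["global"], kf["global"]),
        reverse=True,
    )
    best_id, best_matches = None, 0
    for kf in ranked[:top_k]:
        matches = count_local_matches(query["local"], kf["local"])
        if matches >= min_matches and matches > best_matches:
            best_id, best_matches = kf["id"], matches
    return best_id
```

The global ranking keeps the expensive local comparison limited to `top_k` candidates, which is the main reason to run the two stages in this order.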

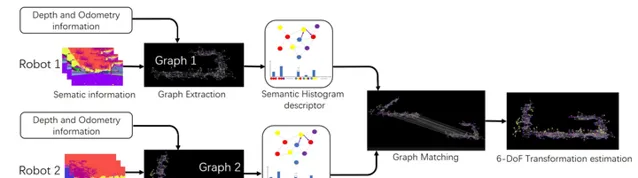

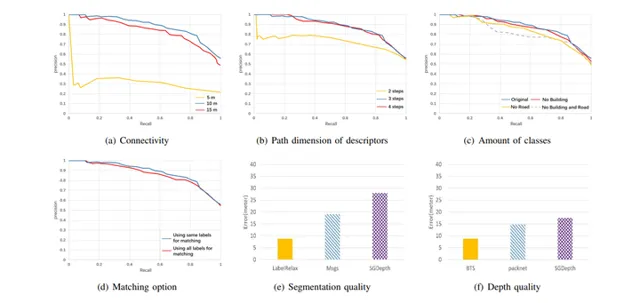

5. 【SLAM】Semantic Histogram Based Graph Matching for Real-Time Multi-Robot Global Localization in Large Scale Environment

Authors: Xiyue Guo, Junjie Hu, Junfeng Chen, Fuqin Deng, Tin Lun Lam

Link:

https://arxiv.org/abs/2010.09297v2

Code:

https://github.com/gxytcrc/semantic-histogram-based-global-localization

https://github.com/gxytcrc/Semantic-Graph-based--global-Localization

Abstract:

The core problem of visual multi-robot simultaneous localization and mapping (MR-SLAM) is how to efficiently and accurately perform multi-robot global localization (MR-GL). The difficulties are two-fold. The first is the difficulty of global localization for significant viewpoint difference. Appearance-based localization methods tend to fail under large viewpoint changes. Recently, semantic graphs have been utilized to overcome the viewpoint variation problem. However, the methods are highly time-consuming, especially in large-scale environments. This leads to the second difficulty, which is how to perform real-time global localization. In this paper, we propose a semantic histogram-based graph matching method that is robust to viewpoint variation and can achieve real-time global localization. Based on that, we develop a system that can accurately and efficiently perform MR-GL for both homogeneous and heterogeneous robots. The experimental results show that our approach is about 30 times faster than Random Walk based semantic descriptors. Moreover, it achieves an accuracy of 95% for global localization, while the accuracy of the state-of-the-art method is 85%.
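A rough illustration of why a histogram descriptor can be fast: each node of a semantic graph is summarized by a fixed-length histogram of the labels found nearby, and two nodes are compared with a single pass over that histogram. The sketch below is a simplified variant built on assumptions of my own (labels counted within a fixed number of hops, histogram intersection as the similarity, hypothetical function names). The descriptor in the paper may be constructed differently.

```python
from collections import Counter, deque


def semantic_histogram(graph, labels, node, classes, hops=2):
    """Normalized histogram of semantic labels found within `hops` edges of `node`."""
    seen = {node}
    queue = deque([(node, 0)])
    counts = Counter()
    while queue:
        current, depth = queue.popleft()
        if depth == hops:
            continue
        for neighbor in graph[current]:
            if neighbor not in seen:
                seen.add(neighbor)
                counts[labels[neighbor]] += 1
                queue.append((neighbor, depth + 1))
    total = sum(counts.values()) or 1
    return [counts[c] / total for c in classes]


def match_nodes(graph_a, labels_a, graph_b, labels_b, classes, hops=2):
    """For each node in graph A, pick the same-label node in graph B with the closest histogram."""
    hists_b = {n: semantic_histogram(graph_b, labels_b, n, classes, hops) for n in graph_b}
    matches = {}
    for node in graph_a:
        hist_a = semantic_histogram(graph_a, labels_a, node, classes, hops)
        candidates = [n for n in graph_b if labels_b[n] == labels_a[node]]
        if candidates:
            # Histogram intersection: larger means more similar surroundings.
            matches[node] = max(
                candidates,
                key=lambda n: sum(min(x, y) for x, y in zip(hist_a, hists_b[n])),
            )
    return matches
```

Since the descriptor depends only on labels and graph connectivity, not on image appearance, two robots looking at the same place from very different viewpoints can still produce similar histograms, provided their semantic segmentations agree.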
